Passing multichoice exams without studying

One of my psychology lecturers required our class to buy the textbook Writing for Psychology (O’Shea et al., 2006). He referred to it as “The Bible”. The book talked about things like how to structure a written argument and format a research proposal, and it was the key to passing undergraduate psychology assignments.

The otherwise serious textbook devoted half a page to multiple-choice exam technique, with advice such as “pick the longest answer” and “if all else fails, choose C”. Like all good humour, it was hard to tell if the authors were joking or not. And the information was not referenced, even though the book has an entire chapter on how to reference information. But it got me wondering if there really was any pattern to multichoice tests that could be exploited to give the test taker an edge.

I decided to test my idea by throwing a couple of machine learning techniques at some sample questions.

The best-case scenario would be to find a model that was both simple enough to use in real life (“always choose the longest answer”) and interpretable enough to reveal something about the psychological biases of a test writer (“lies come to us more easily than the truth”). But I’d settle for p < 0.05.

The code and a presentation of the results are on GitHub.

Finding a Dataset

Rather than overfitting a model to a particular style of exam (“half the answers to Prof. Smith’s behavioural economics tests are C”), I wanted my results to be applicable to multiple choice tests in general. So I was looking for a dataset of multichoice questions and answers that was typical of a wide range of tests.

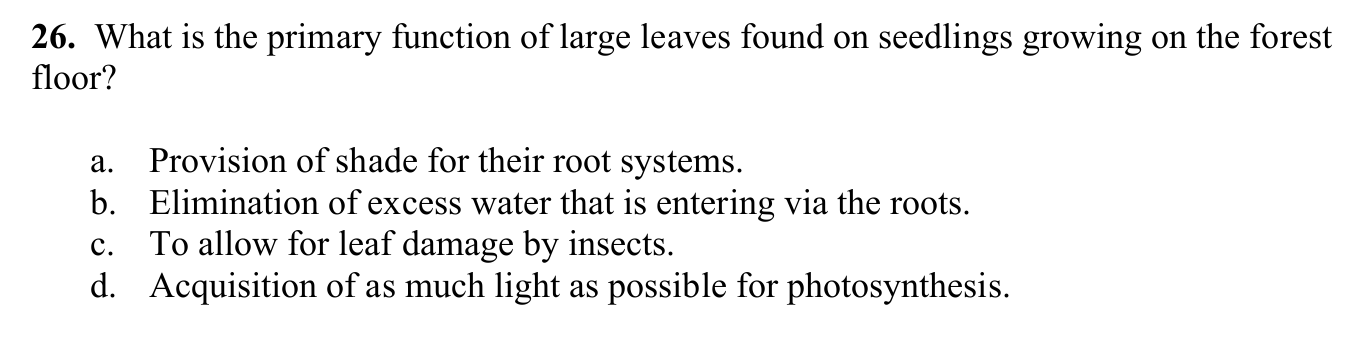

I settled on the Australian Science Olymiads, which I remember taking at school. Here’s the sort of question they ask:

The organisation publishes PDFs of past exams and solutions on their website. When I downloaded the data in 2014, there were 400 questions and 1800 answers, spread over 19 exams. The exams cover three subjects: biology, chemistry, and physics. This ought to be broad enough to reduce the risk of picking up patterns specific to a single subject.

I was also hoping to improve representativeness by ensuring the exams were written by different people. To begin with, it seems unlikely that a single person would write the exams for three diverse subjects over six years. But we can go one step further by looking at the author field of the PDF documents: the 19 papers show 9 unique authors, with several papers listing no author at all. Now that’s no guarantee of distinct authors: I could be detecting the person who compiled each document, or who set up the computer. But short of full-on writing style analysis (which apparently is called stylometry), I’m pretty confident that the results won’t pick up on the biases and preferences of a single person.

Data Wrangling

In an extreme display of naivety and laziness, I decided to parse the question and answer data out of the PDFs programmatically, rather than doing it by hand. What should have been a couple of hours of data entry ended up taking me several days of programming, and I still ended up with a fairly large error rate.

First I tried parsing the PDF binary, but was bitten by the “presentation over semantics” philosophy of PDFs. The biggest problem was getting the answer labels to correspond with the answer text. The extracted text would look something like this:

a.

Provision of shade for their root systems.

b.

c.

Elimination of excess water that is entering via the roots.

To allow for leaf damage by insects.

Acquisition of as much light as possible for photosynthesis.

d.

which is parsable, but there were about five different variations of this in each document, each requiring special parsing logic. All to render an identically formatted result. So I supplemented the parsing method with optical character recognition, taking whichever result didn’t throw an error and favouring OCR when the two results conflicted.
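The fallback logic can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: `combine_parsers` and the two parser callables are hypothetical names, and each parser is assumed to return a mapping from answer label to answer text or to raise an exception on failure.

```python
from typing import Callable, Optional

# Assumed shape of one parsed question: answer label -> answer text.
Answers = dict[str, str]


def combine_parsers(
    pdf_parse: Callable[[bytes], Answers],
    ocr_parse: Callable[[bytes], Answers],
    page: bytes,
) -> Optional[Answers]:
    """Return the first result that parses without error, trying OCR first.

    Trying OCR first means that when both parsers succeed but disagree,
    the OCR result wins. Returns None when both parsers fail.
    """
    for parser in (ocr_parse, pdf_parse):
        try:
            return parser(page)
        except Exception:
            # A parser that throws is skipped in favour of the other one.
            continue
    return None
```

A question for which this returns `None` would count towards the unparsed share.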

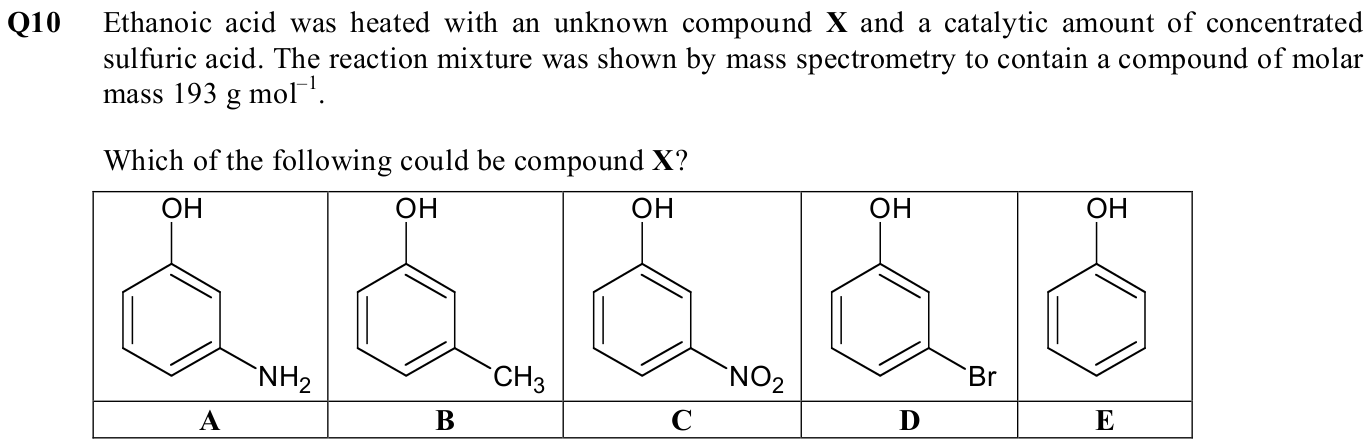

Finally, there were lots of questions that were too abstract to be parsed by any method:

In total, I managed to parse 80% of the questions into a structured digital format. About 10% were impossible to parse, and the remaining 10% is where my program failed.

Feature Extraction

For each answer I extracted 32 features from both the answer text and the question text, such as

- Number of words

- Average word length

- Inverse question logic: the question is worded something like “Which of the following statements is INCORRECT?”

- Value rank: for numerical answers, the size of the number relative to the other answers

I avoided adding metadata about the exams, like the subject or the author. Missing values were replaced with mean values from the other questions. As labels I had whether each answer was correct or incorrect, from the provided answer keys.
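To make the four example features and the mean imputation concrete, here is a small sketch using only the standard library. The function names, the keyword list for inverse questions, and the 0-to-1 scaling of the value rank are all assumptions for illustration; the original 32 features may have been defined differently.

```python
import re
from statistics import mean
from typing import Optional


def word_features(text: str) -> dict[str, float]:
    """Number of words and average word length for one answer."""
    words = re.findall(r"[A-Za-z']+", text)
    return {
        "n_words": float(len(words)),
        "avg_word_len": float(mean(len(w) for w in words)) if words else 0.0,
    }


def is_inverse_question(question: str) -> bool:
    """Flag questions asking for the wrong statement (assumed keyword list)."""
    return bool(re.search(r"\b(INCORRECT|NOT|EXCEPT|FALSE)\b", question))


def value_ranks(answers: list[str]) -> list[Optional[float]]:
    """Rank bare numeric answers from 0.0 (smallest) to 1.0 (largest).

    Non-numeric answers get None, to be imputed later.
    """
    values: list[Optional[float]] = []
    for answer in answers:
        try:
            values.append(float(answer))
        except ValueError:
            values.append(None)
    numeric = sorted(v for v in values if v is not None)
    if len(numeric) < 2:
        return [None] * len(answers)
    top = len(numeric) - 1
    return [None if v is None else numeric.index(v) / top for v in values]


def impute_mean(column: list[Optional[float]]) -> list[float]:
    """Replace missing values with the mean of the values that are present."""
    present = [v for v in column if v is not None]
    fill = mean(present) if present else 0.0
    return [fill if v is None else v for v in column]
```

Note that `value_ranks` only recognises bare numbers; answers with units attached would need extra parsing first.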

Modelling

A regression analysis showed no significant correlation for any one parameter, nor for all the parameters as a whole. This likely rules out the existence of a simple rule-of-thumb model.

So next I tried a neural network with a single hidden layer of 16 nodes. I suspected there would be some nonlinear interactions between the features I selected, in particular a feature of the question interacting with a feature of the answer. Neural networks can automatically tune for higher-order features. However, using a neural network practically rules out a psychologically interpretable result.

Results

If the labels were chosen randomly, you’d expect to score 21%. The neural network had a test score of 26% averaged over ten runs. The model is 5 percentage points better than random choice. So if you had to guess 20 questions in an exam, you would get one more correct with the neural network model than by randomly guessing.
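The arithmetic behind these numbers is simple enough to write down. In the sketch below, the 26% and 21% figures come from the text, while the function names and the list of option counts are hypothetical. With 1800 answers over 400 questions there are about 4.5 options per question, so a random-guess baseline somewhere between 20% and 25% is what you would expect.

```python
def random_baseline(options_per_question: list[int]) -> float:
    """Expected accuracy when guessing uniformly at random on every question."""
    return sum(1 / k for k in options_per_question) / len(options_per_question)


def extra_correct(n_questions: int, model_acc: float, baseline_acc: float) -> float:
    """Expected number of additional correct answers over random guessing."""
    return n_questions * (model_acc - baseline_acc)


# Hypothetical exam with one four-option and two five-option questions.
baseline = random_baseline([4, 5, 5])

# Figures quoted in the text: 26% for the network, 21% for random guessing.
print(round(extra_correct(20, 0.26, 0.21), 2))  # 1.0
```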

That’s a lot of effort for a small increase. As far as time allocation goes, it’s probably not an improvement on studying. And also not an improvement on actual cheating. However, the results seem significant despite the small effect size, suggesting that there are exploitable biases in multichoice exams.

Hindsight

I did this project a few years ago, and have learnt a lot about this sort of thing since then. Here’s what I’d do differently today:

- Manually define nonlinear features. The nonlinearity was my motivation for using a neural network, but there were only a few interactions that I had in mind. I might have been better off just assigning the ones I could think of, which would allow for use of simple interpretable methods like regression.

- Use an NLP approach. Rather than looking for relationships between features and labels, another approach I’d like to try is treating the data as two different corpora (correct and incorrect answers) and trying to classify unseen answers into one or the other. You’d miss out on some of the more complicated features like numerical value and anything to do with the question, but it might capture writing style better than I could design into a feature.
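One way the two-corpus idea could look is a multinomial naive Bayes classifier with add-one smoothing. This is only a sketch of one possible technique, not something I ran on the exam data; the class name, the tokenizer, and the choice of naive Bayes are all assumptions, and both corpora are assumed to be non-empty.

```python
import math
import re
from collections import Counter

LABELS = ("correct", "incorrect")


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


class TwoCorpusClassifier:
    """Multinomial naive Bayes over two corpora, with add-one smoothing."""

    def __init__(self, correct: list[str], incorrect: list[str]) -> None:
        corpora = {"correct": correct, "incorrect": incorrect}
        # Number of answers per label, used for the class prior.
        self.docs = {label: len(texts) for label, texts in corpora.items()}
        # Token counts per label.
        self.counts = {
            label: Counter(t for text in texts for t in tokenize(text))
            for label, texts in corpora.items()
        }
        self.vocab = set(self.counts["correct"]) | set(self.counts["incorrect"])

    def log_score(self, text: str, label: str) -> float:
        """Log prior plus smoothed log likelihood of the text under a label."""
        counts = self.counts[label]
        total = sum(counts.values()) + len(self.vocab)
        score = math.log(self.docs[label] / sum(self.docs.values()))
        for token in tokenize(text):
            if token in self.vocab:  # ignore words never seen in training
                score += math.log((counts[token] + 1) / total)
        return score

    def predict(self, text: str) -> str:
        """Return the label with the higher score ("correct" wins exact ties)."""
        return max(LABELS, key=lambda label: self.log_score(text, label))
```

For a single question, you would score every candidate answer and pick the one with the largest gap between its "correct" and "incorrect" scores, rather than classifying each answer independently.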